Cost Aspects of LLM Integration

Intended audience: Developers, Administrators

AO Platform: 4.3

Overview

In our latest product release, we are enhancing the AO Platform with LLM integration, which lets users ask free-form questions and simplifies the user experience. Contract AI and Easy Answers also use the LLM for entity extraction (for example, automatically identifying locations, product names, and contract dates), improving data accuracy and efficiency. With the addition of Retrieval-Augmented Generation (RAG), we now offer advanced Q&A over unstructured documents such as PDFs. Easy Answers also supports context-based conversations: the system retains and builds on previous exchanges, so users can interact more naturally and receive more relevant, personalized responses.

Usage and Activity

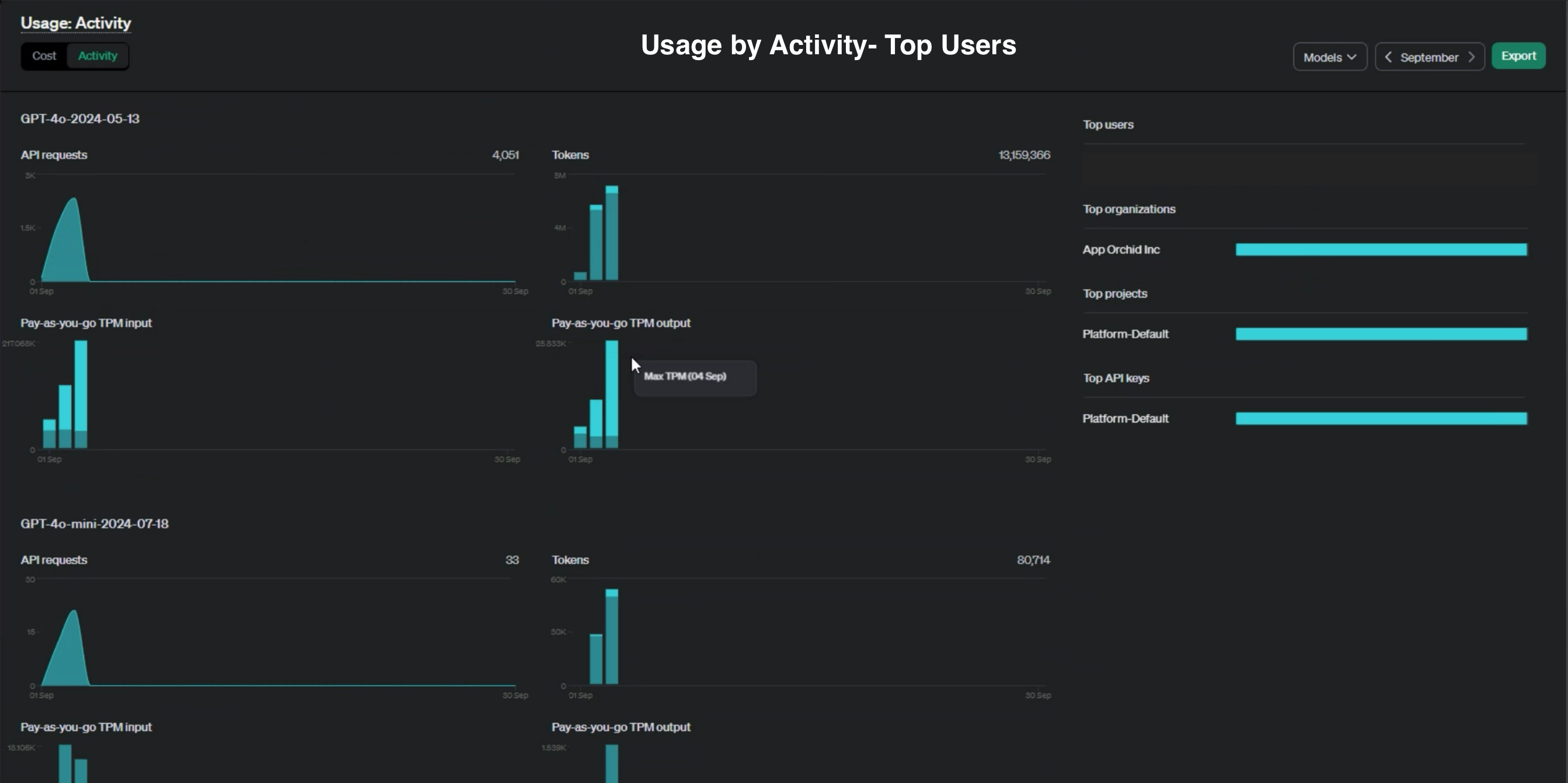

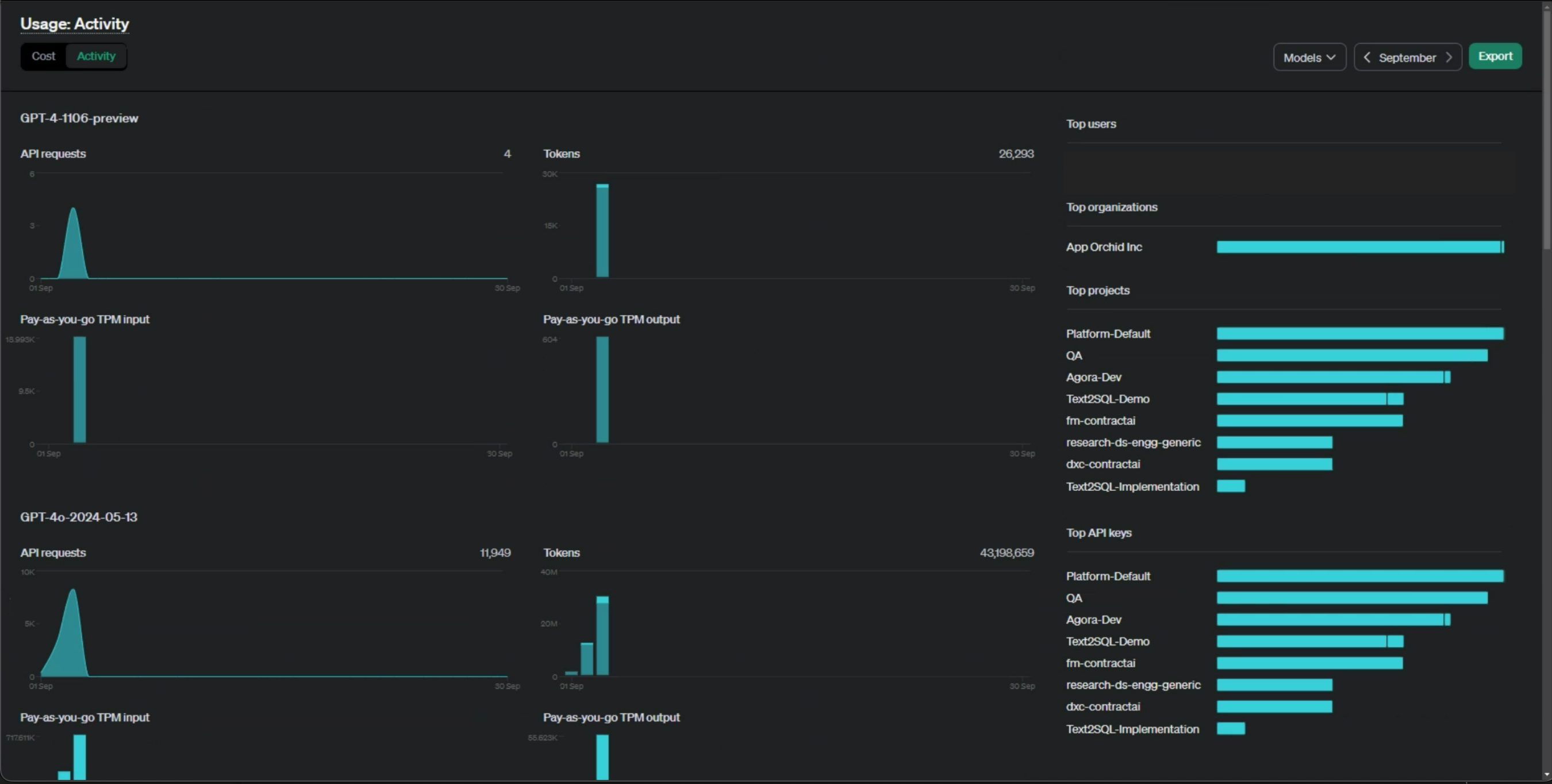

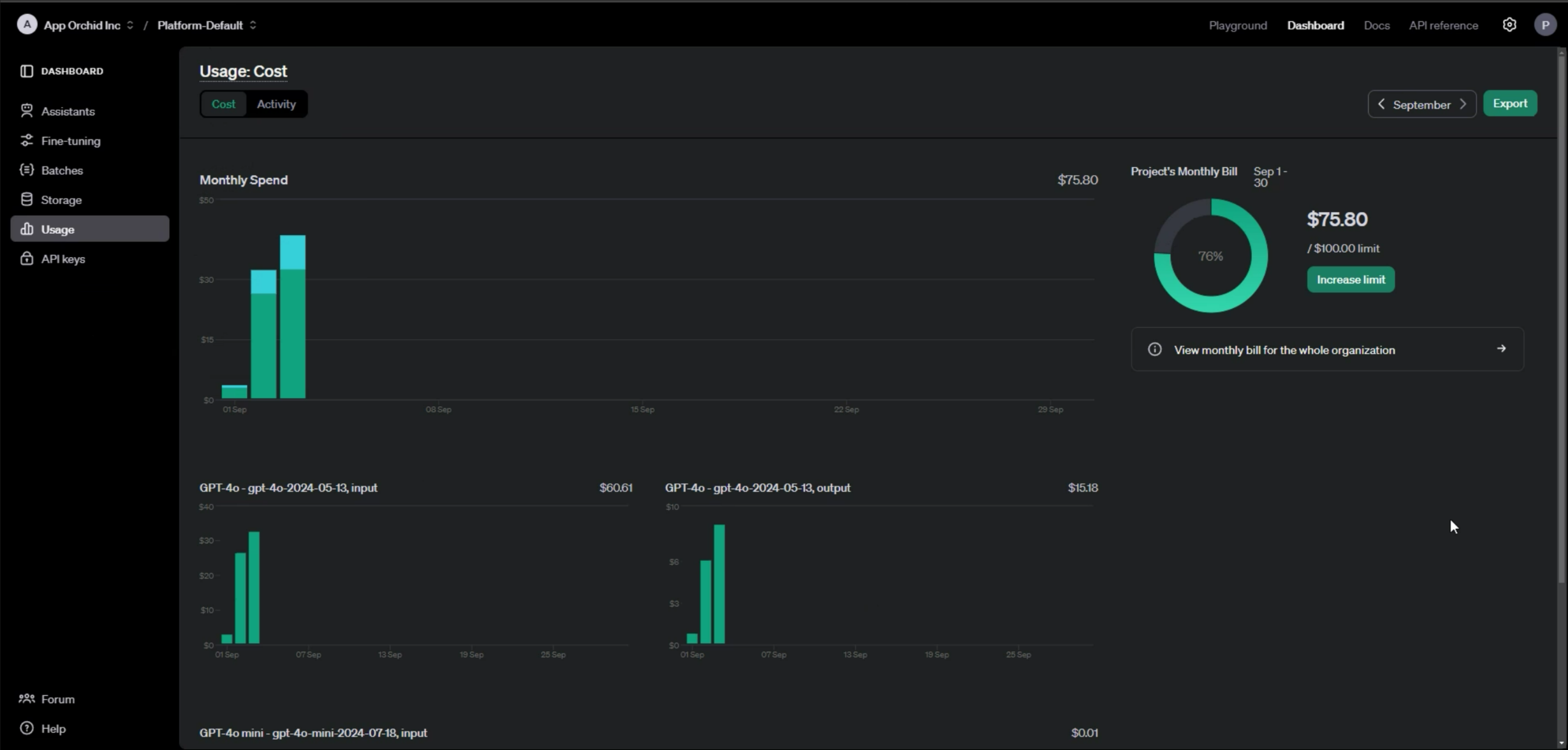

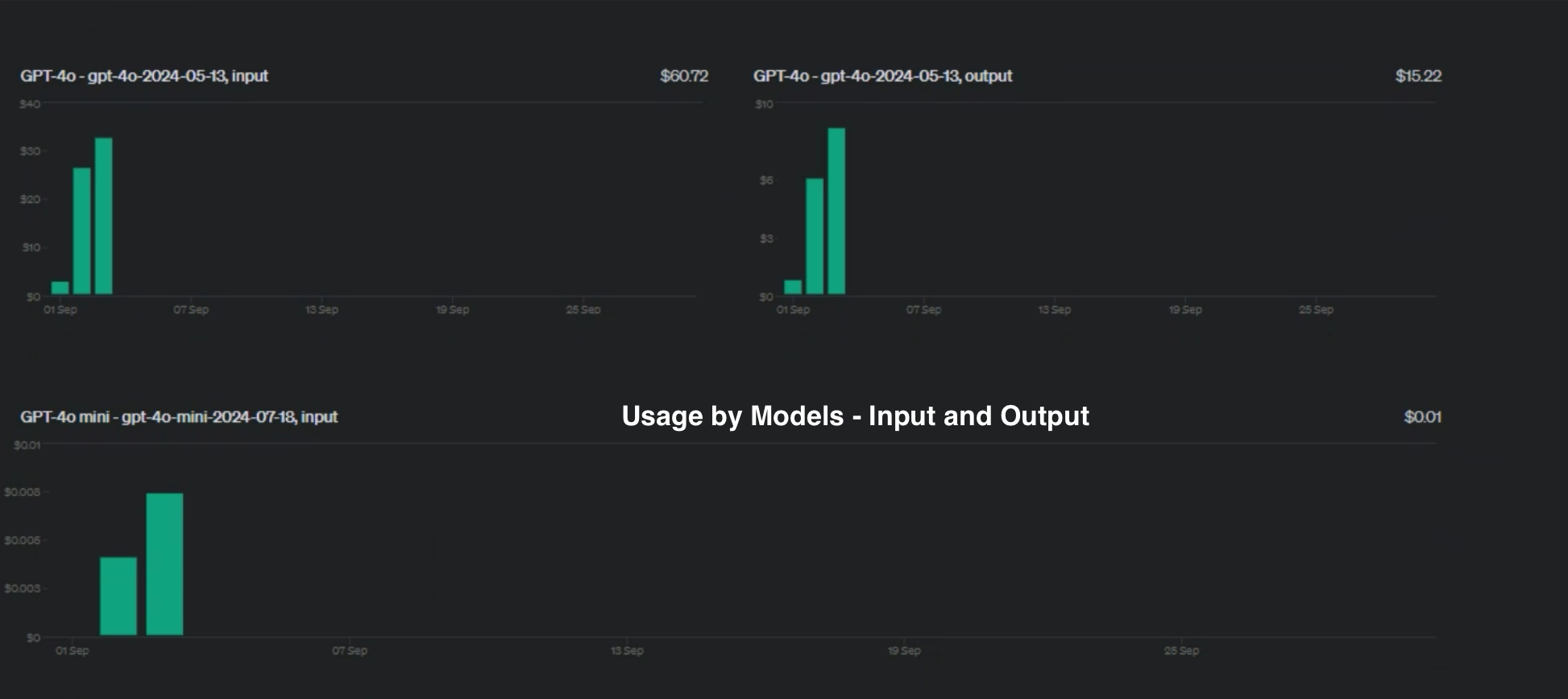

Because the LLM integration uses OpenAI's GPT-4, understanding and managing the associated costs is essential. Usage and costs are tracked primarily through OpenAI, which provides tools for monitoring and controlling consumption. OpenAI reports usage along several dimensions:

By Model: Tracks how often each model is used and how many tokens it consumes, which determines the associated cost.

By User: Monitors individual user activity, offering a detailed view of usage patterns.

By Organization: Aggregates usage data at the organizational level, allowing a broader perspective on overall consumption.
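To illustrate the three reporting dimensions, here is a minimal Python sketch that rolls hypothetical usage records up by model, user, and organization. The `UsageRecord` fields and the `aggregate` helper are assumptions made for illustration; they are not OpenAI's export format or API. In practice the underlying data would come from OpenAI's usage reporting.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UsageRecord:
    # Hypothetical record shape; field names are illustrative, not OpenAI's schema.
    organization: str
    user: str
    model: str
    input_tokens: int
    output_tokens: int


def aggregate(records, key):
    """Sum requests and tokens per value of `key` ("model", "user", or "organization")."""
    totals = defaultdict(lambda: {"requests": 0, "input_tokens": 0, "output_tokens": 0})
    for r in records:
        bucket = totals[getattr(r, key)]
        bucket["requests"] += 1
        bucket["input_tokens"] += r.input_tokens
        bucket["output_tokens"] += r.output_tokens
    return dict(totals)


# Usage with made-up sample records.
records = [
    UsageRecord("acme", "alice", "gpt-4", 1200, 300),
    UsageRecord("acme", "bob", "gpt-4", 800, 200),
    UsageRecord("acme", "alice", "gpt-3.5-turbo", 500, 100),
]
by_model = aggregate(records, "model")
by_user = aggregate(records, "user")
by_org = aggregate(records, "organization")
```

The same records answer all three questions; only the grouping key changes.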

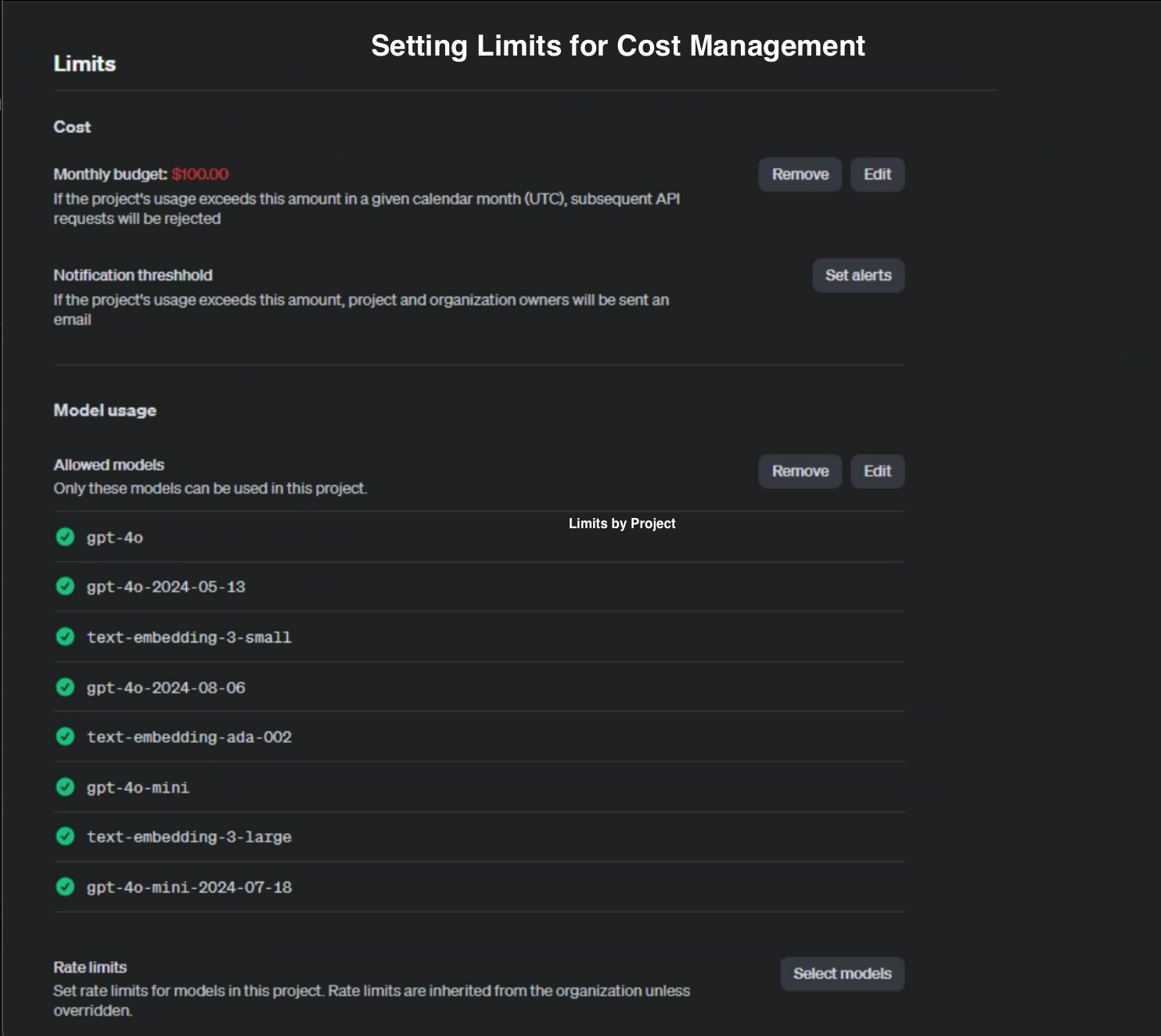

To manage costs effectively, OpenAI offers several mechanisms:

Setting Usage Limits: Organizations can set hard or soft limits to prevent unexpected cost overruns.

Budget Alerts: Alerts can be configured to notify users when usage approaches a predefined threshold.

API Throttling: The rate of API calls can be adjusted to control costs by limiting the frequency of high-cost operations.
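OpenAI applies its own limits and alerts through the organization's settings. A client calling the API could also apply the same ideas locally. The sketch below is hypothetical (`BudgetGuard` and `RateLimiter` are not part of any OpenAI SDK): it records one alert when projected spend reaches a soft limit, refuses calls that would exceed a hard limit, and throttles calls with a sliding time window.

```python
import time
from collections import deque
from dataclasses import dataclass, field


@dataclass
class BudgetGuard:
    """Hypothetical client-side spend guard with a soft and a hard limit (USD)."""
    soft_limit_usd: float   # reaching this records an alert but lets the call proceed
    hard_limit_usd: float   # calls that would exceed this are refused
    spent_usd: float = 0.0
    alerts: list = field(default_factory=list)

    def check(self, estimated_cost_usd: float) -> bool:
        """Return True if a call with this estimated cost may proceed."""
        projected = self.spent_usd + estimated_cost_usd
        if projected > self.hard_limit_usd:
            return False
        if projected >= self.soft_limit_usd and not self.alerts:
            # Alert only once, the first time the soft limit is reached.
            self.alerts.append(
                f"Projected spend ${projected:.2f} reached soft limit ${self.soft_limit_usd:.2f}"
            )
        return True

    def record(self, actual_cost_usd: float) -> None:
        self.spent_usd += actual_cost_usd


class RateLimiter:
    """Hypothetical sliding-window throttle: at most max_calls per window_s seconds."""

    def __init__(self, max_calls: int, window_s: float, clock=time.monotonic):
        self.max_calls = max_calls
        self.window_s = window_s
        self.clock = clock
        self.calls = deque()  # timestamps of recently allowed calls

    def allow(self) -> bool:
        now = self.clock()
        # Drop timestamps that have left the window.
        while self.calls and now - self.calls[0] >= self.window_s:
            self.calls.popleft()
        if len(self.calls) < self.max_calls:
            self.calls.append(now)
            return True
        return False


# Usage: $75 already spent against an $80 soft / $100 hard limit.
guard = BudgetGuard(soft_limit_usd=80.0, hard_limit_usd=100.0)
guard.record(75.0)
first_ok = guard.check(6.0)    # proceeds; soft limit reached, alert recorded
guard.record(6.0)
second_ok = guard.check(25.0)  # refused; would exceed the hard limit
```

The injectable `clock` parameter makes the limiter easy to test; a version shared across threads would also need a lock.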

Usage Cost Sample

Key Considerations

To manage costs effectively for LLM-based features in Easy Answers and Contract AI, it is essential to track usage and activity across three key areas:

Input Question: The number and length of questions sent to the LLM, since every question is billed by the tokens it contains.

Output Response: The length of the responses generated by the LLM; output tokens are billed as well, typically at a higher per-token rate than input tokens.

Length of the Context: How long context-based conversations run per user interaction. Longer conversations carry more previous exchanges as context, which increases the number of tokens processed per request.
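The cost of a single request is input tokens × input price plus output tokens × output price. In a context-based conversation, earlier exchanges are typically sent again with each new question, because OpenAI's Chat Completions API does not retain conversation state between calls. The Python sketch below estimates how cost grows over a conversation under that assumption. The rates are illustrative placeholders, not current OpenAI prices, and `Pricing`, `request_cost`, and `conversation_cost` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Per-1,000-token prices in USD. The values used below are illustrative only."""
    input_per_1k: float
    output_per_1k: float


def request_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Cost of one request: input and output tokens are billed separately."""
    return (input_tokens / 1000) * pricing.input_per_1k + (output_tokens / 1000) * pricing.output_per_1k


def conversation_cost(turns, pricing: Pricing, system_tokens: int = 0):
    """Estimate the cost of a multi-turn conversation.

    turns: list of (question_tokens, answer_tokens) pairs.
    Assumes every request resends all earlier questions and answers as context,
    so input tokens grow with each turn.
    """
    history = system_tokens
    per_turn = []
    for question_tokens, answer_tokens in turns:
        input_tokens = history + question_tokens
        per_turn.append(request_cost(input_tokens, answer_tokens, pricing))
        history += question_tokens + answer_tokens  # this exchange joins the context
    return sum(per_turn), per_turn


# Usage: three turns of a 100-token question and a 200-token answer,
# at assumed rates of $0.03 (input) and $0.06 (output) per 1,000 tokens.
pricing = Pricing(input_per_1k=0.03, output_per_1k=0.06)
total, per_turn = conversation_cost([(100, 200)] * 3, pricing)
```

With identical questions and answers, the third turn here costs more than twice the first because context accumulates; trimming or summarizing older turns is a common way to cap this growth.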

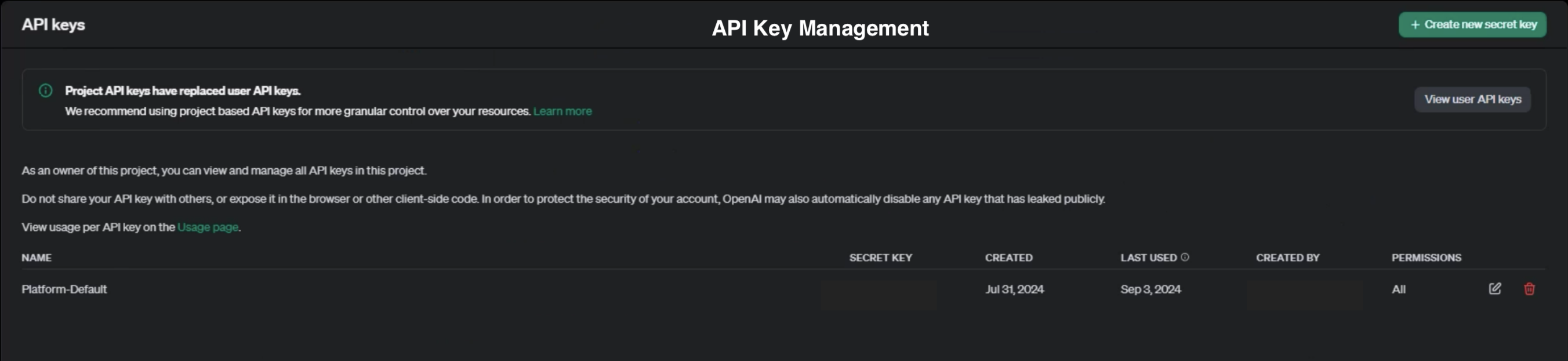

Project Management and Key Assignment

For effective management of individual customer projects, we assign each project its own API key. A project can hold multiple keys, but each key belongs exclusively to one project and cannot be shared across projects. This ensures that usage and costs are tracked precisely on a per-project basis, enabling accurate monitoring and control of expenses. You can also restrict the models each key can access, so that only specified models are used within a given project. By monitoring these aspects closely and using OpenAI's cost management tools, customers can optimize their use of LLM features and keep project-specific expenses under control.
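On OpenAI's side, these rules are managed through its project and key settings. A platform that routes calls for several customer projects may also want to enforce and account for them itself. The following is a minimal hypothetical Python sketch (the `ProjectKeyRegistry` class, project names, and key IDs are illustrative): each key maps to exactly one project, a project can hold several keys, usage is attributed to the owning project, and calls using models outside the project's allowlist are rejected.

```python
from collections import defaultdict


class ProjectKeyRegistry:
    """Hypothetical registry: each key belongs to exactly one project; a project may hold many keys."""

    def __init__(self):
        self._key_to_project = {}
        self._allowed_models = {}
        self._spend = defaultdict(float)

    def add_project(self, project, allowed_models):
        self._allowed_models[project] = set(allowed_models)

    def assign_key(self, key_id, project):
        if project not in self._allowed_models:
            raise KeyError(f"unknown project {project!r}")
        owner = self._key_to_project.get(key_id)
        if owner is not None and owner != project:
            # A key cannot be shared across projects.
            raise ValueError(f"key {key_id!r} already belongs to project {owner!r}")
        self._key_to_project[key_id] = project

    def record_usage(self, key_id, model, cost_usd):
        """Attribute a call's cost to the key's project, rejecting disallowed models."""
        project = self._key_to_project[key_id]
        if model not in self._allowed_models[project]:
            raise PermissionError(f"model {model!r} not allowed for project {project!r}")
        self._spend[project] += cost_usd
        return project

    def spend(self, project):
        return self._spend[project]


# Usage with illustrative project names and key IDs.
reg = ProjectKeyRegistry()
reg.add_project("contract-ai-acme", allowed_models=["gpt-4"])
reg.add_project("easy-answers-acme", allowed_models=["gpt-4", "gpt-3.5-turbo"])
reg.assign_key("key-1", "contract-ai-acme")
reg.assign_key("key-2", "contract-ai-acme")  # a project may hold several keys
reg.assign_key("key-3", "easy-answers-acme")
reg.record_usage("key-1", "gpt-4", 0.50)
reg.record_usage("key-2", "gpt-4", 0.25)
```

Keying spend by project rather than by key is what lets several keys roll up into one per-project total.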